Table of contents

- What is a Creative and what does Creative Testing mean?

- How does an ideal creative testing process work?

- Conclusion on Creative Testing

It's no secret anymore: the Creative is currently the biggest lever we have in Social Performance Marketing. Current best practice is to give Facebook's artificial intelligence as much freedom as possible. What still remains firmly in our hands, however, is the Creative.

What is a Creative and what does Creative Testing mean?

The Creative is the visual that is shown to users in the Facebook feed, the Instagram feed and all other placements.

A few years ago, targeting was done through fine-grained settings in Ads Manager; today, it happens largely through the Creative itself. But how is that possible?

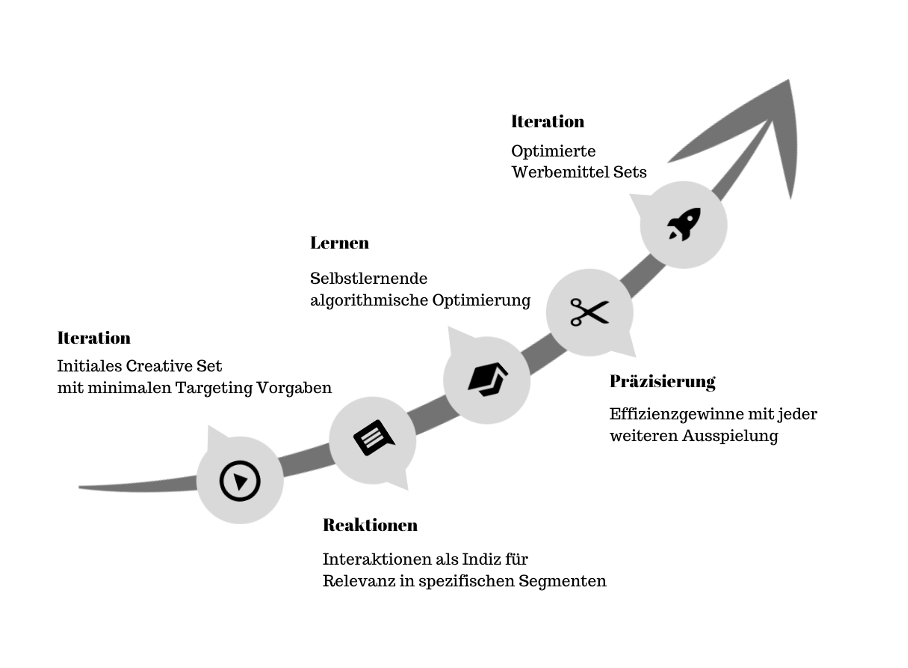

Once the Creative starts being delivered, countless data points are collected. These can be a like, a comment, an interaction on the landing page, or even just expanding the ad copy via the 'read more' link.

Based on these positive user signals, the algorithm learns which types of users the ad is relevant for. The more data is collected, the more efficiently the ad is delivered.

Creative Testing Process, own presentation

The path to high-performing campaigns is primarily about producing and distributing Creatives and messages that are relevant to the target groups. But how do you arrive at these Creatives?

The answer is obvious: you have to test, test, test...

And this is where the crux lies. What are the best practices for creative testing? Should new Creatives simply be switched on in existing ad groups? How much budget does each test get? Is a separate testing campaign necessary?

As you can see, testing is not a trivial topic, and many questions remain open.

Below, our guest author Felix Morsbach guides you step by step through his creative testing strategy. If you are new to Facebook Ads, feel free to take a look at the OMR guide to Facebook Advertising first.


How does an ideal creative testing process work?

By definition, a test is a method for checking whether a hypothesis is true.

I like to split a test, in this case a Creative Test, into the following four components:

- Hypothesis of Creative Testing

- Measurable test run of Creative Testing

- Evaluation of Creative Testing

- Iteration of Creative Testing

In the following, we take a detailed look at these four components of a test.

1. Hypothesis of Creative Testing

A hypothesis is a reasoned assumption that a certain Creative Concept will produce certain results.

Example: A testimonial video ad creates massive social proof and reduces our acquisition costs by up to X%.

The hypothesis can of course be detailed further.

But how can such a hypothesis be derived?

Here, it matters whether you already have historical data or not.

If you do, you can already derive certain trends from it. Which types of Creatives have worked particularly well? How were these Creatives structured? What kinds of messages did they use?
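If the historical data can be exported with the type of Creative per ad, a first trend analysis can be as simple as aggregating spend and conversions per type and comparing the resulting CPA. The following is a minimal Python sketch; the row format and the figures are made-up assumptions for illustration, not real results.

```python
from collections import defaultdict

# Made-up example export: (creative_type, spend_eur, conversions) per ad.
history = [
    ("Testimonial Video", 1200.0, 60),
    ("Testimonial Video", 800.0, 35),
    ("Static Image", 900.0, 20),
    ("Unboxing Video", 500.0, 25),
]


def cpa_by_creative_type(rows):
    """Sum spend and conversions per creative type and return the CPA in EUR."""
    totals = defaultdict(lambda: [0.0, 0])  # type -> [spend, conversions]
    for creative_type, spend, conversions in rows:
        totals[creative_type][0] += spend
        totals[creative_type][1] += conversions
    # A type without any conversion gets an infinite CPA so it sorts last.
    return {
        creative_type: (spend / conversions if conversions else float("inf"))
        for creative_type, (spend, conversions) in totals.items()
    }


# Print the creative types from lowest (best) to highest CPA.
for creative_type, cpa in sorted(cpa_by_creative_type(history).items(), key=lambda item: item[1]):
    print(f"{creative_type}: {cpa:.2f} EUR")
```

Summing spend and conversions before dividing is deliberate: averaging the per-ad CPAs would give a small test the same weight as a large one.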

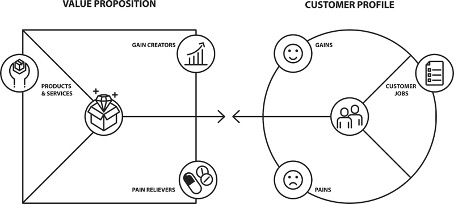

Furthermore, it makes sense to be clear about your own company's value proposition. Templates such as the Value Proposition Canvas can help here.

Value Proposition Principle, Source: Adobe Stock

Real customer voices are a treasure trove of good messaging. Do you already have reviews on Trusted Shops, Amazon or Google Reviews?

Example review for a possible callout in the ad, Source: Trustpilot.com

If there are no such reviews, check which topics are being discussed in relevant forums or in Reddit/Twitter threads.

2. Measurable test run of Creative Testing

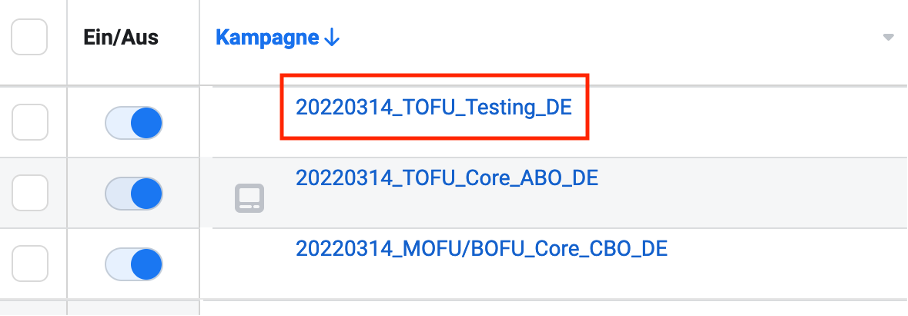

A dedicated Creative Testing campaign

In order to test cleanly, separate your testing setup from the core campaign setup.

Following current best practices, the Core Setup should ideally be kept significantly simpler. In many cases, one prospecting (new customer) campaign and one retargeting campaign are sufficient.

Source: Facebook Ads Manager

The Testing Campaign brings you the following advantages:

- You can cleanly separate the performance of the Testing Setup from that of the Core Setup.

- The performance of the Core Setup is not dragged down by the tests.

- You can allocate budget to tests and evaluate them cleanly.

In the Creative Testing campaign, use budget optimization at the ad group level (ABO) rather than at the campaign level.

Each Creative Test should run in its own ad group. This ensures that each test receives sufficient budget and that its learnings can be isolated. Within the ad group, place several variants of the Creative Concept being tested. It is essential that only one element of the Creative changes at a time, for example the thumbnail, the hook or the text overlay.
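The "one element at a time" rule can be checked mechanically before the ads go live. The sketch below is a hypothetical helper, assuming each variant is described by a few named elements (the field names are illustrative, not a Facebook format); it generates variants that differ from a base Creative in exactly one element and reports which elements differ between two variants.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class CreativeVariant:
    # Illustrative element names; adapt them to your own Creative Concepts.
    concept: str
    hook: str
    thumbnail: str
    text_overlay: str


def single_element_variants(base, element, options):
    """Return copies of `base` in which only `element` takes a different value."""
    return [
        replace(base, **{element: option})
        for option in options
        if option != getattr(base, element)
    ]


def changed_elements(a, b):
    """List the names of the elements in which two variants differ."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


base = CreativeVariant("Testimonial Video", "Question hook", "Product close-up", "Native text overlay")
variants = single_element_variants(base, "hook", ["Question hook", "Problem hook", "Result hook"])
for variant in variants:
    print(variant.hook, changed_elements(base, variant))
```

Running `changed_elements` against the base for every variant in an ad group is a quick way to catch a variant that accidentally changes two things at once.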

Targeting

We use open targeting for each Creative Test. As mentioned at the beginning, the Creative finds its target group through its design and approach. During this search, you should place as few restrictions as possible on the algorithm and let the data decide.

A second major advantage of Open Targeting is scalability. If you test in very narrow, proven target groups, you may get good results more quickly. However, you would be severely limiting how far your Creatives can later scale.

Classic target group tests using interests and lookalikes should be carried out in the Core Campaign Setup.

Budget

When it comes to Creative Testing, budget is one of the most frequently asked questions. The budget must always be set in relation to the acquisition costs. As an example, let's take a product with acquisition costs of 25 EUR. The goal should be to be able, in theory, to achieve 50 conversions within 7 days.

So we calculate: 25 EUR × 50 conversions = 1,250 EUR.

1,250 EUR would thus be our weekly budget. Now we divide by 7 to get the daily budget:

1,250 EUR / 7 ≈ 180 EUR

So we should start with about 180 EUR per ad group in order to be able, in theory, to achieve the 50 conversions per week. There is no general rule for how much of the total budget should go into testing. A good starting value is 20% of the prospecting budget. Depending on performance, the budget can then be adjusted upwards or downwards.
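The budget arithmetic above can be written as a small function. As a hypothetical extension, the second function estimates how many tests can run in parallel if roughly 20% of the prospecting budget goes into testing; the 5,000 EUR daily prospecting budget in the usage line is an assumed figure.

```python
import math


def daily_test_budget(target_cpa, target_conversions=50, days=7):
    """Daily budget per ad group needed to reach `target_conversions` in `days` at `target_cpa`."""
    weekly_budget = target_cpa * target_conversions
    return weekly_budget / days


def parallel_tests(prospecting_daily_budget, per_test_daily_budget, testing_share=0.2):
    """How many ad groups fit into the testing share of the prospecting budget."""
    testing_budget = prospecting_daily_budget * testing_share
    return math.floor(testing_budget / per_test_daily_budget)


per_test = daily_test_budget(25)  # 25 EUR CPA * 50 conversions / 7 days
print(f"Daily budget per test: {per_test:.2f} EUR")
print("Parallel tests:", parallel_tests(5000, per_test))
```

The exact value is about 178.57 EUR per day, which the text rounds to 180 EUR; with an assumed 5,000 EUR daily prospecting budget, the 20% testing share covers five such tests.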

Disclaimer: I do not believe that a test has to exit the learning phase before you can judge the quality of a Creative. However, the learning phase is a good indicator of the point at which Facebook itself considers the results reasonably reliable.

Naming Convention

A strict naming convention is incredibly important for a clean evaluation in the next step.

In the long term, you should be able to identify the winning combinations of Creative Concept, Message, Product and Elements across multiple tests.

Here are examples of Creative Concepts:

- Founder Video: The founder of the company appears in the ad and talks about the product/brand

- Testimonial Video: Real customers test and review the product on camera

- Unboxing Video: The product is unpacked and shown on camera

What are angles or approaches? The approach defines how the target group is addressed. Ideally, this filters out sub-segments within the target group.

Imagine a handbag brand that sells handbags in the medium price range in their online store. The handbags are sustainably produced, and a special feature is the interchangeable flap.

Among other things, the following four approaches are conceivable:

- Focus on environmental consciousness

- Focus on veganism (no real leather)

- Focus on the practical interchangeable flaps

- Focus on the lifestyle factor

Concept and approach should be clear, but why is it important to include the product and the elements as well?

Not every Creative Concept works for every product, and the same applies to the approach. It is therefore important to be able to tell later which product a test was run for.

Elements are for example hooks, thumbnails, design elements, text overlays or voice overs. They can be added and changed to modify the Creative Concept.

To be able to evaluate at any time and over several tests which combinations of Concept-Approach-Product-Element work particularly well, all these components must be taken into account in the Naming.

The form and order in which these components appear in the name is secondary. What matters is that they are separated by a defined symbol, such as an underscore, a quotation mark or even a '^'.

A template for naming the ad groups might look like this:

01TEST_Date_Audience_Placement_Product_Approach_Concept_Element_New/Iteration

Filled out:

01TEST_220317_OpenTargeting_AutomaticPlacements_Handbag_Vegan_FounderVideo_NativeTextOverlay_Iteration

By numbering the tests uniquely in ascending order (in the example, '01TEST'), you always keep track of how many tests you have run and in what order. At the end of the name, I like to add whether it is a new Creative Concept ('New') or an iteration of a Creative Concept that has already been used ('Iteration').
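Because every component sits at a fixed position between separators, names can be generated and parsed automatically, which avoids typos that would later break the evaluation. A minimal sketch in Python, assuming the underscore as separator and the component order of the template above (the field names are illustrative):

```python
SEPARATOR = "_"  # the defined symbol between components
FIELDS = ("test", "date", "audience", "placement", "product",
          "approach", "concept", "element", "status")


def build_name(parts):
    """Join the components in template order; reject values containing the separator."""
    for key in FIELDS:
        if SEPARATOR in parts[key]:
            raise ValueError(f"{key!r} must not contain {SEPARATOR!r}: {parts[key]!r}")
    return SEPARATOR.join(parts[key] for key in FIELDS)


def parse_name(name):
    """Split an ad group name back into its named components."""
    values = name.split(SEPARATOR)
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} components, got {len(values)}: {name!r}")
    return dict(zip(FIELDS, values))


name = build_name({
    "test": "01TEST", "date": "220317", "audience": "OpenTargeting",
    "placement": "AutomaticPlacements", "product": "Handbag", "approach": "Vegan",
    "concept": "FounderVideo", "element": "NativeTextOverlay", "status": "Iteration",
})
print(name)
print(parse_name(name)["concept"])
```

Rejecting separators inside values is what keeps parsing unambiguous: a value like "Founder_Video" would otherwise shift every following component by one position.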

3. Evaluation of Creative Testing

When the tests are running, the question naturally arises: How long does the test have to be conducted and which metrics do you need to look at?

Because of data issues triggered by iOS 14.5 and similar changes, data from users who have opted out of tracking on Apple smartphones is reported up to 72 hours late (sometimes even later).

So it's best to look at the numbers for the tests after 3 days.

You will then usually face three different scenarios.

- Scenario 1: The results are very strong. The CPA (= acquisition costs) is below the average CPA.

- Scenario 2: The results are mediocre. The CPA is average or slightly below average. The On-Platform metrics look good.

- Scenario 3: The results are poor. Few or no conversions were achieved.
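Sorting tests into these three scenarios can be expressed as a simple rule. The sketch below is hypothetical: the 3-day minimum follows the text, but the ratios that separate "strong", "average" and "poor" relative to the average CPA are assumed values you would tune to your own account.

```python
def classify_test(days_running, spend, conversions, average_cpa,
                  strong_ratio=0.9, poor_ratio=1.5, min_days=3):
    """Return 'wait', 'strong', 'average' or 'poor' for one test (ad group).

    strong_ratio and poor_ratio are assumed thresholds relative to the average CPA.
    """
    if days_running < min_days:
        return "wait"  # delayed opt-out data may still be missing
    if conversions == 0:
        return "poor"
    cpa = spend / conversions
    if cpa < strong_ratio * average_cpa:
        return "strong"
    if cpa <= poor_ratio * average_cpa:
        return "average"
    return "poor"


print(classify_test(3, 400, 20, average_cpa=25))  # CPA 20 EUR
print(classify_test(3, 500, 20, average_cpa=25))  # CPA 25 EUR
print(classify_test(3, 500, 0, average_cpa=25))   # no conversions
```

This sketch looks at the CPA only; in practice, an "average" result should also be checked against the On-Platform metrics before deciding what to do with it.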

What metrics do we look at?

There are a number of metrics you can use to analyze the quality of a Creative. The decisive metric is, of course, usually the CPA. To analyze why the CPA might not be optimal, it helps to look at the On-Platform metrics.

A big advantage of the On-Platform metrics is that the data is generated on the platform itself. Here we have 100% of the data, regardless of whether users have opted out or not.

The following metrics, among others, are interesting here:

- Outgoing Link Click-through rate: the proportion of users who saw the ad and clicked a link leading to the target URL.

- Hook-Rate (3-sec. video views / impressions): the proportion of users who saw the ad and stayed on it for at least 3 seconds. This is a custom metric that needs to be created manually.

- Hold-Rate (ThruPlays / impressions): the proportion of users who saw the ad and stayed on it for at least 15 seconds (or watched it to the end, for shorter videos). This is also a custom metric.
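Outside Ads Manager, the same ratios can be computed from exported raw numbers. A minimal sketch, assuming you have impressions, outbound clicks, 3-second video views and ThruPlays per ad (the field names are illustrative, and the counts in the example are made up):

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    impressions: int
    outbound_clicks: int
    video_views_3s: int
    thruplays: int


def ratio(numerator, denominator):
    """Safe division: an ad without impressions gets 0.0 instead of an error."""
    return numerator / denominator if denominator else 0.0


def on_platform_metrics(stats):
    return {
        "outbound_ctr": ratio(stats.outbound_clicks, stats.impressions),
        "hook_rate": ratio(stats.video_views_3s, stats.impressions),  # 3-sec views / impressions
        "hold_rate": ratio(stats.thruplays, stats.impressions),       # ThruPlays / impressions
    }


metrics = on_platform_metrics(AdStats(impressions=10_000, outbound_clicks=120,
                                      video_views_3s=3_000, thruplays=800))
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Keeping all three ratios on the same denominator (impressions) makes them directly comparable across ads with very different delivery volumes.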

What do we do with Winners?

Once you have identified Winners, scale them directly in the Testing Campaign by increasing the budget by 20% every 72 hours. Repeat this for 2-3 rounds. If performance remains strong, you have proven two things:

- The hypothesis is correct, i.e. the Creative works.

- The Creative is scalable.

Next, the Winning Creative is duplicated into the Core Campaign.

Very important: We never want to shut down anything that performs well. The ads remain active in the Testing Campaign. Only when the performance drops will the ads be turned off here.
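The scaling rhythm for Winners (plus 20% every 72 hours, for two to three rounds) produces a predictable budget schedule. This sketch simply computes that schedule from a starting budget; the 180 EUR start is taken from the budget example above, and the function name is hypothetical.

```python
def scaling_schedule(start_budget, rounds=3, step=0.20, interval_hours=72):
    """Return (hours_since_start, daily_budget) for the start and each scaling round."""
    return [
        (i * interval_hours, round(start_budget * (1 + step) ** i, 2))
        for i in range(rounds + 1)
    ]


for hours, budget in scaling_schedule(180):
    print(f"after {hours:>3} h: {budget:.2f} EUR/day")
```

Because the increases compound, three rounds raise the daily budget to about 1.73 times the starting value (180 to roughly 311 EUR), not 1.6 times.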

What to do with average performance?

With average performance, you optimize at the ad level and then observe what happens: analyze the performance of the different variants in your ad group and turn off the poor performers.

Then, let the ad group continue to run and see how the performance develops when the other Creatives receive more spend.

As soon as an ad has spent two to three times the average CPA without achieving a conversion, you can safely turn it off. If no successful Creative emerges from this approach, the entire ad group can be shut down.
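The switch-off rule for individual ads translates directly into code. A minimal sketch, assuming you know each ad's spend and conversions; the multiple (between 2 and 3 in the text) is a parameter, and treating an ad group as a shut-down candidate once every ad in it has been switched off is a simplifying assumption.

```python
def ads_to_turn_off(ads, average_cpa, multiple=3.0):
    """Names of ads that spent `multiple` x the average CPA without a single conversion.

    `ads` maps ad name -> (spend_eur, conversions).
    """
    return [
        name
        for name, (spend, conversions) in ads.items()
        if conversions == 0 and spend >= multiple * average_cpa
    ]


def review_ad_group(ads, average_cpa, multiple=3.0):
    """Return the ads to switch off and whether the whole ad group can be shut down."""
    off = ads_to_turn_off(ads, average_cpa, multiple)
    return off, len(off) == len(ads)


# Hypothetical ad group with three hook variants.
ads = {"hook_a": (80.0, 0), "hook_b": (60.0, 3), "hook_c": (40.0, 0)}
print(review_ad_group(ads, average_cpa=25))
```

With an average CPA of 25 EUR and a multiple of 3, only "hook_a" has crossed the 75 EUR limit; "hook_c" keeps running until it either converts or reaches that spend.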

What to do with poor performance?

Nobody wants to burn money. If performance is abysmal after 3 days, you can confidently switch off the ad group.

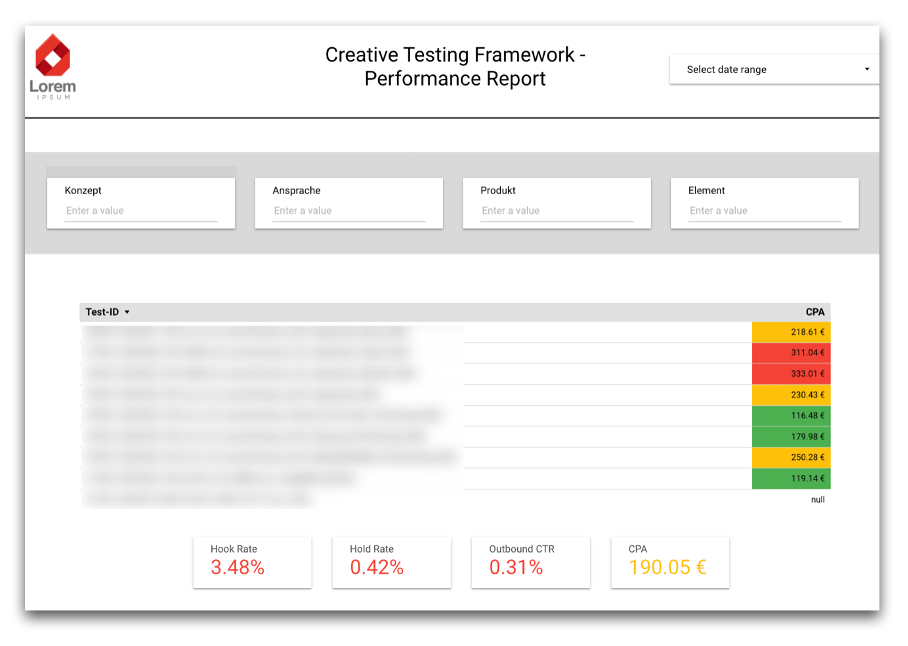

Cross-test analysis via Google Data Studio

A dashboard for cross-test analysis should show the performance of the individual ad groups. Because of the campaign structure, each ad group represents one test. Using conditional formatting, you can add a traffic light system to the most important metrics. This way, you can tell at a glance whether a test is performing well (green), mediocre (yellow) or poorly (red).

Dashboard example for monitoring Creative Testing, Source: Own Google Data Studio

Now comes the trick: using advanced filters, you can filter by the individual components of the naming. By combining several of these filters, you can determine how a particular combination of approach and Creative Concept performed across, for example, the last 50 tests. This data is worth its weight in gold.
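Outside a dashboard tool, the same cross-test analysis can be sketched in a few lines: parse each ad group name, group by the components you are interested in, and compute the CPA per combination. This assumes the naming template above with underscores as separators, and the three rows of spend and conversions are made-up figures.

```python
from collections import defaultdict

FIELDS = ("test", "date", "audience", "placement", "product",
          "approach", "concept", "element", "status")


def cpa_by(rows, *keys):
    """Group (ad_group_name, spend, conversions) rows by name components and return CPA."""
    totals = defaultdict(lambda: [0.0, 0])  # group -> [spend, conversions]
    for name, spend, conversions in rows:
        values = name.split("_")
        if len(values) != len(FIELDS):
            raise ValueError(f"name does not match the template: {name!r}")
        parts = dict(zip(FIELDS, values))
        group = tuple(parts[key] for key in keys)
        totals[group][0] += spend
        totals[group][1] += conversions
    return {group: (s / c if c else float("inf")) for group, (s, c) in totals.items()}


rows = [
    ("01TEST_220317_OpenTargeting_AutomaticPlacements_Handbag_Vegan_FounderVideo_NativeTextOverlay_New", 900.0, 30),
    ("02TEST_220324_OpenTargeting_AutomaticPlacements_Handbag_Vegan_TestimonialVideo_Hook_New", 800.0, 20),
    ("03TEST_220331_OpenTargeting_AutomaticPlacements_Handbag_Eco_FounderVideo_Thumbnail_Iteration", 600.0, 30),
]
for combination, cpa in cpa_by(rows, "approach", "concept").items():
    print(combination, f"{cpa:.2f} EUR")
```

Passing different keys, for example `cpa_by(rows, "concept")` or `cpa_by(rows, "product", "approach")`, mirrors stacking different filters in the dashboard.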

Depending on the tool, you can of course build in many other useful visualizations and filter options.

4. Iteration of Creative Testing

This part is often forgotten. Testing is pointless if the results are not put to use. To do so, you need to analyze the test results and derive next steps from them.

But why is this necessary at all? Once a Winning Creative has been identified, can't you simply scale it vertically and be done?

Unfortunately, something called “Ad Fatigue” gets in the way.

Example Facebook Ad, Source: Facebook.com

Ad Fatigue occurs when the Creative has been seen too often on average in the target audience.

When Ad Fatigue occurs, the Conversion Rate typically drops by up to 40% and the Acquisition Costs increase accordingly.

You can see how often, on average, your ad has been shown to each person via the 'Frequency' metric in Ads Manager. In Prospecting, a frequency of 1-1.5 over the last seven days is normal; in Retargeting, it can be significantly higher.

In Prospecting in particular, you should pay attention as soon as the frequency exceeds 2. In my experience, an ad can still run stably even at an above-average frequency. It only becomes really problematic when the frequency rises sharply within a short time. This indicates that the algorithm can hardly find any new relevant users for the Creative and starts showing it to the same users again and again.
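The distinction between a high but stable frequency and a sharp rise can be turned into a simple alert. A hypothetical sketch, assuming a list of daily values of the 7-day frequency for one Prospecting ad: the threshold of 2 follows the text, while the 3-day window and the 30% rise that counts as "sharp" are assumptions.

```python
def frequency_alerts(frequency_series, threshold=2.0, window=3, max_rise=0.30):
    """Flag days with a sharp frequency rise ('fatigue risk') or a high level ('watch').

    frequency_series: one 7-day frequency value per day, oldest first.
    """
    alerts = []
    for day, frequency in enumerate(frequency_series):
        if day >= window:
            earlier = frequency_series[day - window]
            if earlier > 0 and (frequency - earlier) / earlier > max_rise:
                alerts.append((day, "fatigue risk"))
                continue
        if frequency > threshold:
            alerts.append((day, "watch"))
    return alerts


print(frequency_alerts([1.2, 1.25, 1.3, 1.3, 1.35, 1.8, 2.1]))  # sharp rise at the end
print(frequency_alerts([2.2, 2.2, 2.25, 2.25, 2.3]))           # high but stable
```

The second series stays above 2 the whole time but only triggers "watch", reflecting the observation that a high but stable frequency is less alarming than a sudden jump.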

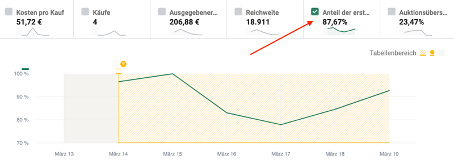

To track this development over time, look at the FTIR (First Time Impression Ratio). You can either set it up as a custom metric (Reach / Impressions) or view it via the 'Inspect' tool in Ads Manager.
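As a custom metric, the FTIR is simply reach divided by impressions for the same period, which is the reciprocal of the frequency (impressions divided by reach). The sketch below computes it per day from exported (reach, impressions) pairs and reports the first day it falls below a floor; the 0.6 floor and the figures are assumed values for illustration.

```python
def ftir(reach, impressions):
    """First Time Impression Ratio as a custom metric: reach / impressions."""
    return reach / impressions if impressions else 0.0


def first_day_below(daily_rows, floor=0.6):
    """Index of the first day whose FTIR drops below `floor`, or None."""
    for day, (reach, impressions) in enumerate(daily_rows):
        if ftir(reach, impressions) < floor:
            return day
    return None


# Made-up daily (reach, impressions) pairs for one ad.
daily = [(8_000, 10_000), (7_200, 10_000), (6_500, 10_000), (5_400, 10_000)]
print([round(ftir(r, i), 2) for r, i in daily])
print(first_day_below(daily))
```

A steadily falling FTIR is the same signal as a rising frequency, seen from the other side: fewer and fewer impressions reach people who have not seen the ad before.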

Example Performance of the First Impressions of an ad, Source: Facebook Ads Manager

What to do against Ad Fatigue?

Since Ad Fatigue occurs when the ad has been seen too often in the relevant target group, either a completely new ad needs to be put live or the previously functioning ad needs to be modified.

Ideally, the goal is to preempt Ad Fatigue so that the Conversion Rate never drops in the first place. That is why it is important to test Creatives continuously.

If you have identified a successful Creative through testing and at some point it hits the limit of the target group, you can go into iteration and revive the Creative.

Here, the elements are crucial. If, for example, it is a Testimonial Video Ad, you can change the hook, choose a different thumbnail or even add a voice over. With such small changes, a different part of the target group is addressed and the proportion of First Time Impressions increases again. With these modifications, a Creative Concept can be scaled and the lifespan can be multiplied.

Interface between advertising and design = Performance Design

Creative Testing only works with a clean interface between design and advertising. The two teams should not operate in silos. A basic prerequisite for the two departments to work together efficiently is that the designers understand the basics of advertising and the advertisers understand the relevance of the Creative.

As a rule, advertisers tend to look at results in the form of numbers and complex dashboards. To present the test results to the design team in as easy-to-understand and visual a form as possible, a tool like Miro is suitable.

Miro is essentially an endlessly large digital whiteboard. It lets you easily prepare the performance of the latest test results for designers and comment on them with simple visualizations.

Visualization example using Miro, Source: Own Miro Board

Conclusion on Creative Testing

The automation of typical Media Buyer tasks will continue to increase. Genuine marketing skills such as empathy, understanding the target audience and messaging are moving back into focus. Brands and Media Buyers should therefore concentrate on these skills. To avoid making decisions based on subjective impressions, a data-driven Creative Testing approach is essential.

Of course, many roads lead to Rome, and you should keep questioning your approaches. The Creative Testing Framework presented here is currently the most effective path for me and my team.

It is important to note: this approach only really makes sense from a five-digit spend upwards. For brands that have not yet reached this level, I recommend launching fresh Creatives directly in the Core Campaign.