Table of contents

- Where do you find crawling errors in the Google Search Console and how do you detect them?

- Crawling Error Types

- Analysis of 404 errors

- Analysis of Crawling Problems with the URL Check Tool

- Conclusion

Crawling errors occur when Google bots cannot access individual URLs or your entire website. Typical causes are faulty server settings, CMS errors or changes to the URL structure. Google Search Console makes it easy to find these crawling errors so that you can fix them. In this article, you will learn what these crawling errors are, how to find them with Google Search Console and how to fix them.

Where do you find crawling errors in the Google Search Console and how do you detect them?

As soon as you are logged into the

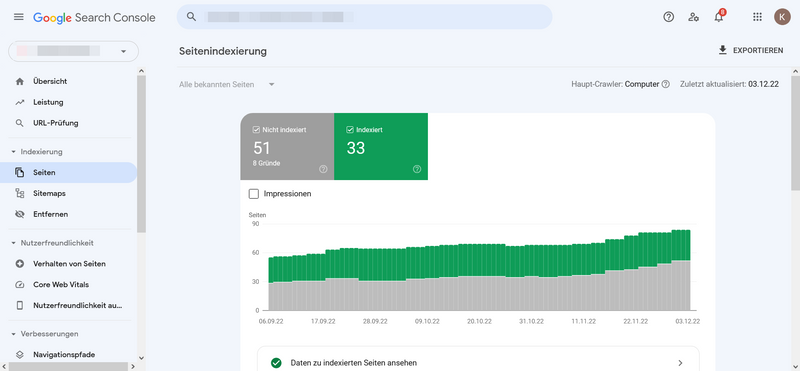

Google Search Console just click on pages under the section Indexation.

Page Indexation

The first chart shows how many of your pages are indexed and how many are not. If you scroll down, you will see a report that lists the reasons why pages have not been indexed and how many pages are affected by each reason.

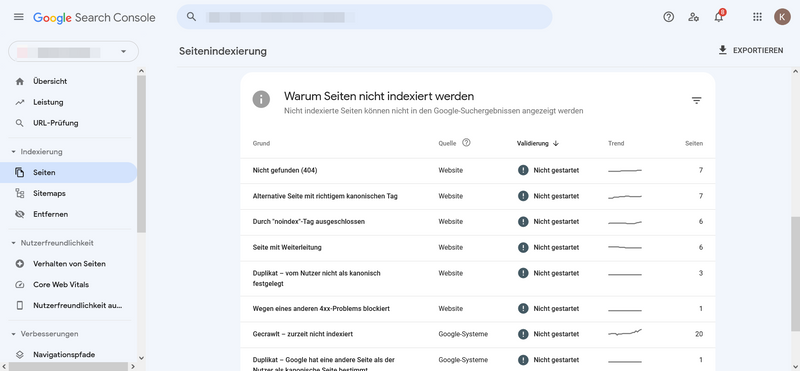

Overview of Crawling Error Report

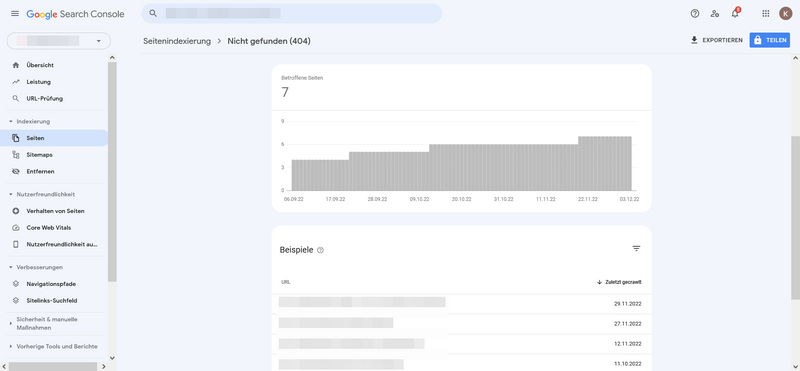

Click on an error to see all affected URLs.

Crawling Error: All Affected URLs

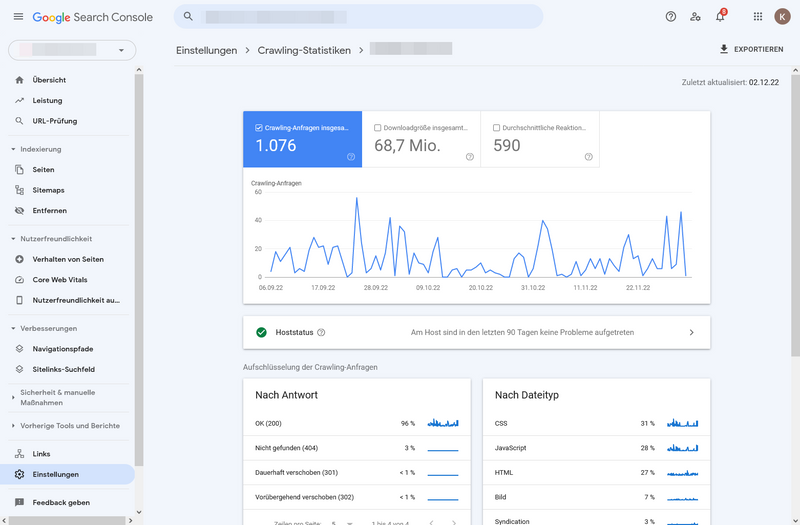

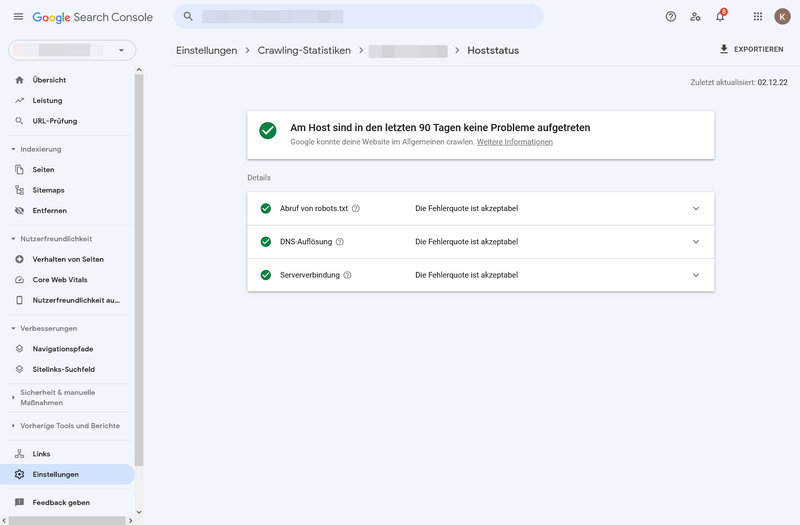

Under "Settings" in the side menu, you can also view your crawl statistics. These statistics always cover the last 90 days.

Crawling Statistics under Settings

A green checkmark in the host status is a good sign: it means that no serious availability problems have occurred in the past 90 days.

Host Status Overview

Crawling Error Types

Crawling errors fall into two types: site errors and URL errors. Site errors are serious because they prevent Google from crawling your entire website. If they persist, your website can no longer be found in search engines. URL errors, on the other hand, are usually less serious: they only mean that a specific page cannot be crawled, and most of them can be fixed easily. Let's take a closer look at both types.

Site Error

DNS Error

DNS stands for Domain Name System; it translates your domain name into the IP address of your server. If Google Search Console shows you this error, Google bots could not look up your domain, so your website was not accessible. This can be a temporary disruption, in which case the Google bots will try to crawl your website again after a while. If the problem persists, your domain or DNS provider is most likely responsible. Contact them to fix the problem.
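Before contacting your provider, you can check whether your domain resolves at all. The following Python sketch uses only the standard library's `socket.getaddrinfo`; `www.example.com` is a placeholder for your own domain. A lookup from your own machine only shows what your resolver sees, so treat the result as a first indication, not as proof of what the Google bots experienced.

```python
import socket


def resolves(hostname: str) -> bool:
    """Return True if the system resolver finds at least one address for hostname."""
    try:
        # Ask for TCP addresses on port 443 (HTTPS), as a crawler connecting to the site would.
        results = socket.getaddrinfo(hostname, 443, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        # The name could not be resolved, e.g. the domain does not exist
        # or no DNS server answered.
        return False
    except UnicodeError:
        # Malformed host names (such as empty labels) cannot be encoded for DNS.
        return False
    return len(results) > 0


if __name__ == "__main__":
    host = "www.example.com"  # placeholder: replace with your own domain
    print(host, "resolves" if resolves(host) else "does NOT resolve")
```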

Server Error

A server error means that your server did not respond properly to the crawler's request: it either took too long to respond or answered with a 5xx error code instead of the page. Unlike with a DNS error, Google can in principle reach your server. A possible cause is an overloaded server that receives too many simultaneous requests.
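To get a rough idea of whether your server answers slowly or with 5xx errors, you can time a single request yourself. This is a minimal Python sketch using `urllib.request`; the URL is a placeholder, the 10-second timeout is an arbitrary assumption, and one request from your machine does not reproduce the load Google's crawling puts on your server.

```python
import time
import urllib.error
import urllib.request


def check_response(url: str, timeout: float = 10.0) -> dict:
    """Request url once; report the HTTP status and the seconds until headers arrived."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 500 or 503.
        status = err.code
    except OSError as err:
        # No HTTP answer at all: refused connection, DNS failure or timeout.
        # (URLError and socket timeouts are both subclasses of OSError.)
        return {"url": url, "status": None, "seconds": None, "error": str(err)}
    elapsed = time.monotonic() - start
    return {"url": url, "status": status, "seconds": round(elapsed, 2), "error": None}


if __name__ == "__main__":
    result = check_response("https://www.example.com/")  # placeholder URL
    if result["error"]:
        print("No response:", result["error"])
    elif result["status"] >= 500:
        print("Server error:", result["status"])
    else:
        print(f"HTTP {result['status']} after {result['seconds']} s")
```

Repeating the check, for example at peak times, tells you more than a single measurement.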

Robots Failure

This error occurs when the Google bots cannot retrieve the robots.txt file of your website, for example because your server returns an error when the file is requested. You only need this file if you want to exclude specific pages of your website from crawling. Check its content as well: if it contains the line "Disallow: /" for all user agents, it makes your entire website inaccessible to Google bots.
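To check what a robots.txt file actually blocks, you can test its rules locally with Python's `urllib.robotparser`. The sketch below compares a faulty file with one that only excludes a hypothetical `/admin/` area. This standard-library parser applies rules in file order with simple prefix matching and does not support every extension Google understands (such as `*` wildcards inside paths), so treat it as an approximation. It also checks only the file's content, not whether Google can retrieve it.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """Check robots.txt text (not a live file) for whether Googlebot may fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines and also marks the rules as loaded,
    # which can_fetch() requires before it returns anything but False.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Faulty file: blocks the whole site for every crawler.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

# Intended file: only the (hypothetical) admin area is excluded from crawling.
BLOCK_ADMIN = """\
User-agent: *
Disallow: /admin/
"""

if __name__ == "__main__":
    for name, rules in (("BLOCK_ALL", BLOCK_ALL), ("BLOCK_ADMIN", BLOCK_ADMIN)):
        for url in ("https://www.example.com/", "https://www.example.com/admin/login"):
            print(name, url, googlebot_allowed(rules, url))
```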

URL Error

Not found (404)

A URL that you submitted, for example in your sitemap, cannot be found on the web server, which returns the status code 404. You have probably deleted the page or moved it to a different URL.
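To catch such URLs before Google reports them, you can compare your sitemap with the status codes your server returns. This Python sketch assumes a standard sitemaps.org `urlset` file (not a sitemap index) and a hypothetical local copy named `sitemap.xml`. It uses HEAD requests, which some servers handle differently from normal page requests, and `urllib` follows redirects automatically, so a moved URL reports the status of its redirect target.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET
from typing import List, Optional

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> List[str]:
    """Return every <loc> URL listed in a sitemap <urlset> document."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def status_of(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the HTTP status of a HEAD request, or None if there was no HTTP answer."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status  # status after urllib followed any redirects
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 404 or 410
    except OSError:
        return None  # DNS failure, refused connection or timeout


if __name__ == "__main__":
    # Hypothetical local copy of your sitemap file.
    with open("sitemap.xml", encoding="utf-8") as f:
        for url in sitemap_urls(f.read()):
            status = status_of(url)
            if status != 200:
                print(status, url)
```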

Soft 404 error

A soft 404 occurs when a page answers the Google bots with a success status code but looks like an error page to Google, for example because it contains very little content. Google also classifies a redirect to a page whose topic differs from the original page as a soft 404.
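Google does not publish exactly how it classifies soft 404s, but you can look for obvious candidates yourself. The following Python sketch is a rough heuristic, not Google's method: it flags pages that return status 200 but contain very little visible text or a typical error phrase. The 50-word threshold and the phrase list are arbitrary assumptions, and the redirect case is not covered.

```python
from html.parser import HTMLParser
from typing import List


class _TextExtractor(HTMLParser):
    """Collects text outside <script>, <style> and <noscript> elements."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def looks_like_soft_404(status: int, html: str, min_words: int = 50) -> bool:
    """Heuristic: a 200 response with very little visible text or an error phrase."""
    if status != 200:
        return False  # real error codes are not *soft* 404s
    extractor = _TextExtractor()
    extractor.feed(html)
    extractor.close()
    words = " ".join(extractor.parts).lower().split()
    text = " ".join(words)  # normalized whitespace for phrase matching
    error_phrases = ("page not found", "no longer available", "nothing found")
    return len(words) < min_words or any(p in text for p in error_phrases)
```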

Blocked due to access forbidden (403)

The server denies the Google bots access to the content and responds with the status code 403. This can have several causes: your hosting provider or a firewall blocks the bots, or you have set up the page so that only registered users can access it.

Blocked due to other 4xx issue

The server returned a 4xx error that is not covered by any of the other 4xx errors described here. Use the URL Check Tool to examine the page more closely.

Redirect error

If you move or remove a page on your website, you should set up a 301 redirect. Errors occur if a URL is redirected too many times in a row (a redirect chain) or if the redirects lead back to the original URL (a redirect loop).
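If you manage your redirects as a list of old and new URLs (for example, exported from a redirect plugin), you can check that list for chains and loops yourself. This Python sketch works on a hypothetical in-memory redirect map rather than live HTTP requests. The default limit of 10 hops is an assumption for illustration; ideally, every old URL reaches its target with a single redirect.

```python
from typing import Dict, List, Tuple


def follow_redirects(start: str, redirects: Dict[str, str],
                     max_hops: int = 10) -> Tuple[List[str], str]:
    """Follow a redirect map from start and return the visited URLs plus a verdict.

    The verdict is "ok" (the chain ends at a URL that does not redirect),
    "loop" (a URL is reached twice) or "too long" (more than max_hops redirects).
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        if len(path) - 1 >= max_hops:  # len(path) - 1 redirects followed so far
            return path, "too long"
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
    return path, "ok"


# Hypothetical redirect map: old path -> new path.
REDIRECTS = {
    "/old-guitars": "/guitars",             # fine: a single 301
    "/a": "/b", "/b": "/c", "/c": "/a",     # loop back to the original URL
}

if __name__ == "__main__":
    for old in REDIRECTS:
        print(old, *follow_redirects(old, REDIRECTS))
```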

Excluded by "noindex" tag

Your page was submitted for indexing but contains a "noindex" instruction, either in a robots meta tag or in the X-Robots-Tag HTTP header. This sends contradictory signals to Google. If the page should be indexed, simply remove the "noindex" instruction.
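To find out where a "noindex" comes from, check both places. This Python sketch, using only the standard library's `html.parser`, looks for a robots or googlebot meta tag and for an `X-Robots-Tag` header. The HTML and headers are passed in as a string and a dictionary, so fetching the page is up to you.

```python
from html.parser import HTMLParser
from typing import Dict, List


class _RobotsMetaFinder(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attributes without a value arrive as None; treat them as empty strings.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attributes.get("content", "").lower())


def has_noindex(html: str, headers: Dict[str, str]) -> bool:
    """True if the page carries noindex in a robots meta tag or an X-Robots-Tag header."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    # HTTP header names are case-insensitive, so compare them in lowercase.
    header_values = [v.lower() for k, v in headers.items() if k.lower() == "x-robots-tag"]
    return any("noindex" in d for d in finder.directives + header_values)
```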

Duplicate without user-selected canonical

The Google bot has found several versions of this page, and none of them is marked as canonical with a canonical tag. Google has chosen a different version as the primary (canonical) page and excludes this one from indexing.

Duplicate, Google chose different canonical than user

This message is similar to the previous one. The difference is that you have marked this page as canonical, but Google considers a different page the better canonical and indexes that one instead.
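To compare your own canonical declaration with the canonical Google selected (shown in the URL Check Tool), you first need to know what your page declares. The following Python sketch extracts the `href` of a `<link rel="canonical">` tag and resolves it to an absolute URL. It only looks at the first such tag and ignores canonicals sent in HTTP headers; both are simplifying assumptions.

```python
from html.parser import HTMLParser
from typing import List, Optional
from urllib.parse import urljoin


class _CanonicalFinder(HTMLParser):
    """Collects the href values of <link rel="canonical"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attributes.get("href"):
            self.hrefs.append(attributes["href"])


def declared_canonical(page_url: str, html: str) -> Optional[str]:
    """Return the absolute canonical URL the page declares, or None if it declares none."""
    finder = _CanonicalFinder()
    finder.feed(html)
    finder.close()
    if not finder.hrefs:
        return None
    # Relative hrefs are resolved against the URL of the page itself.
    return urljoin(page_url, finder.hrefs[0])
```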

Crawled - currently not indexed

The URL has been crawled but not indexed yet. This can have many causes, so it is best to check each affected URL with the URL Check Tool in Google Search Console.

Discovered - currently not indexed

Google bots have found the page but have not crawled it yet. Usually, Google postponed the crawl because crawling at that time would likely have overloaded your website. Google will try to crawl the page again later.

Not every error needs to be fixed. However, it is important that you look at each error message and then decide whether you need to take action.

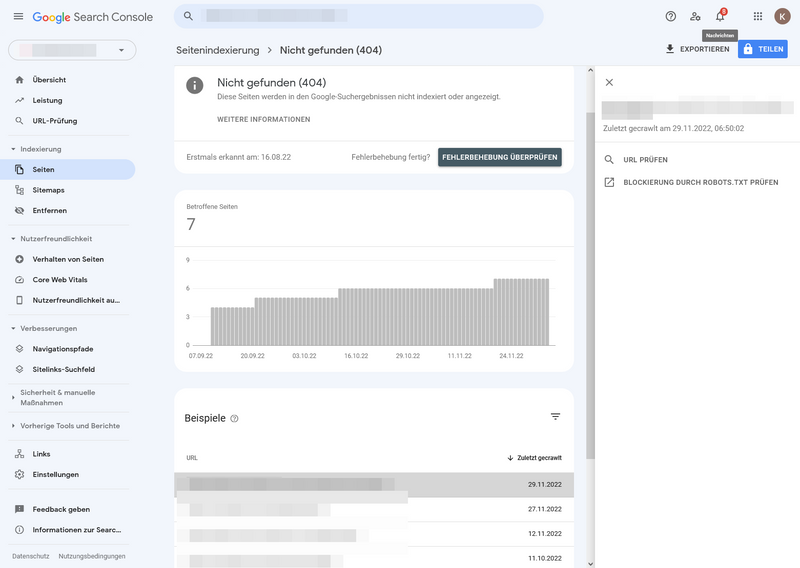

Analysis of 404 errors

404 errors can have many different causes. A 404 error only means that the Google bot cannot find a page; in itself, this does not harm the performance of your website. Most often, the page no longer exists at a location the bot can access, or it no longer has any content worth mentioning. 404 errors occur frequently as your website grows and changes, and you don't always have to take action. Let's take a closer look at the reasons and solutions for 404 errors.

1. URL changed

You changed the URL of a page but forgot to set up a redirect to the new URL. Add the redirect so that visitors do not end up on a 404 page and you do not lose website traffic. For WordPress, there are plugins that can create redirects automatically.

2. Content changed or deleted

The page was indexed, but now it has little or no content. Because the page is still accessible, this is a special form of 404 error: Google reports the URL as a soft 404. If the page no longer exists and its content is not available anywhere else, configure your server to return a 404 (Not Found) or 410 (Gone) status code. Remove all links on your website that lead to this page. If other websites also link to it, ask their owners to remove the link.
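In practice, you set up redirects and 404/410 responses in your web server or CMS, for example with a WordPress plugin. The following Python sketch with the standard library's `http.server` only illustrates which status code each case should return: 301 for a moved page, 410 for a deliberately removed page and 404 for an unknown URL. All paths are hypothetical examples, and the server is meant for local testing, not production use.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional, Tuple

# Hypothetical example data: adapt these tables to your own website.
MOVED = {"/electric-guitars": "/guitars/electric"}  # old path -> new path
GONE = {"/discontinued-amp"}                         # pages removed on purpose
PAGES = {"/", "/guitars/electric"}                   # pages that exist


def route(path: str) -> Tuple[int, Optional[str]]:
    """Decide the status code (and redirect target, if any) for a request path."""
    if path in MOVED:
        return 301, MOVED[path]  # permanent redirect to the new URL
    if path in GONE:
        return 410, None         # "Gone": removed deliberately, will not come back
    if path in PAGES:
        return 200, None
    return 404, None             # unknown path: "Not Found"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Ignore the query string when looking up the path.
        status, location = route(self.path.split("?", 1)[0])
        self.send_response(status)
        if location:
            self.send_header("Location", location)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.end_headers()
        self.wfile.write(f"{status}\n".encode())


if __name__ == "__main__":
    # Serves on http://localhost:8000 for local testing only.
    HTTPServer(("localhost", 8000), Handler).serve_forever()
```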

3. URL wrong

The error is not necessarily on your part; it may come from your visitors. If they enter a URL incorrectly, they end up on a 404 page, and if such a mistyped URL is linked somewhere, it can also appear in your crawling error report. For example, if you run a website about electric guitars and visitors type "/guitar-strigns" instead of "/guitar-strings", they will not find what they are looking for, unless you know the incorrectly entered URLs and set up a redirect. It can also happen that you made a typing mistake in one of your own links. You can easily correct this error.
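If you know which mistyped URLs are being requested (for example from your 404 report or your server logs), you can look up the intended page automatically. This Python sketch uses `difflib.get_close_matches` from the standard library to suggest a redirect target. The list of valid paths and the similarity cutoff of 0.8 are hypothetical, and you should review each suggestion before setting up a redirect.

```python
import difflib
from typing import List, Optional

# Hypothetical list of valid paths on the guitar website from the example.
KNOWN_PATHS = ["/guitar-strings", "/guitar-picks", "/electric-guitars", "/amplifiers"]


def suggest_redirect(bad_path: str, known_paths: List[str],
                     cutoff: float = 0.8) -> Optional[str]:
    """Return the most similar known path, or None if nothing is similar enough."""
    # n=1 keeps only the best match whose similarity ratio reaches the cutoff.
    matches = difflib.get_close_matches(bad_path, known_paths, n=1, cutoff=cutoff)
    return matches[0] if matches else None
```

For example, `suggest_redirect("/guitar-strigns", KNOWN_PATHS)` returns `"/guitar-strings"`, while a path with no close match returns `None`, so you are not tempted to redirect it somewhere arbitrary.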

4. Links in embedded content

Google bots also try to follow links in embedded content, for example in JavaScript. Such URLs are often not part of your website and not real pages that can be called up, so they show up as errors in the crawling error report. You can usually ignore these messages.

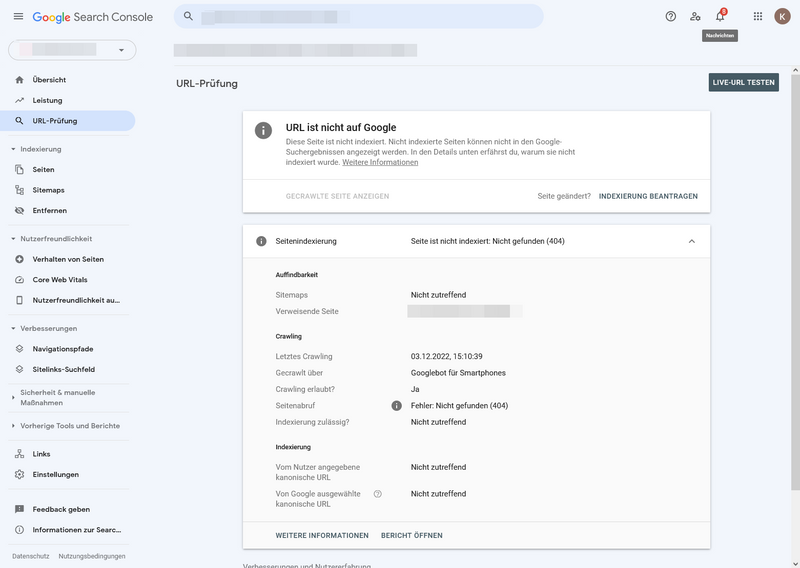

The URL Check Tool (called "URL Inspection" in Google Search Console) provides information about how Google crawled a page, and you can use it to test whether a URL can be indexed. There are several ways to open it. To check a page from the crawling error report, select an error type to open the list of all pages affected by that error. Clicking on a URL under "Examples" opens a panel with the option to inspect the URL.

URL Check - Error Overview

After clicking "Inspect URL", you will see the result of the check.

URL check report

Alternatively, you can enter the URL directly into the search field at the top of Google Search Console. You get the same overview, with one difference: you can also check new pages that have not been crawled yet and request indexing with one click. Here is what the individual sections under page indexing mean:

- Discovery: How Google found your URL, for example via your sitemap or a referring page.

- Crawl: Whether Google could crawl your page, when it was last crawled and which problems occurred.

- Indexing: The canonical URL that you declared for this page and the one Google selected.
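If you need to check many URLs, Search Console also offers a URL Inspection API (`POST https://searchconsole.googleapis.com/v1/urlInspection/index:inspect`). The Python sketch below only builds the JSON request body and groups fields of a response by the three sections described above (how Google found the URL, crawling, indexing). Sending the request requires an OAuth 2.0 access token and is left out, and the sample response in the usage example is made-up data.

```python
from typing import Any, Dict

# Endpoint of the Search Console URL Inspection API (requires OAuth 2.0 authorization).
INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspection_request(page_url: str, property_url: str) -> Dict[str, str]:
    """JSON body for inspecting one URL; property_url is your Search Console property."""
    return {"inspectionUrl": page_url, "siteUrl": property_url, "languageCode": "en-US"}


def summarize(response: Dict[str, Any]) -> Dict[str, Dict[str, Any]]:
    """Group the index status fields of an API response by report section."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return {
        # How Google found the URL.
        "discovery": {
            "sitemaps": status.get("sitemap", []),
            "referring_urls": status.get("referringUrls", []),
        },
        # Whether and when Google could crawl the page.
        "crawl": {
            "last_crawl": status.get("lastCrawlTime"),
            "page_fetch": status.get("pageFetchState"),
            "robots_txt": status.get("robotsTxtState"),
        },
        # Which canonical you declared and which one Google chose.
        "indexing": {
            "indexing_state": status.get("indexingState"),
            "user_canonical": status.get("userCanonical"),
            "google_canonical": status.get("googleCanonical"),
        },
    }
```

If `user_canonical` and `google_canonical` differ in the summary, you are looking at one of the duplicate situations described in the section on crawling error types.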

Conclusion

Google Search Console is a very good diagnostic tool for finding and fixing crawling errors. Some crawling errors have little to no impact on the performance and usability of your website; others require immediate action. Therefore, check regularly whether crawling errors occur on your website and which ones. OMR Reviews has a guide to setting up Google Search Console that helps you make the most of this SEO tool.

Recommended SEO Tools

You can find and compare more recommended SEO tools on OMR Reviews. In total, we have listed over 150 SEO tools (as of December 2023) that can help you increase your organic traffic in the long term. So take a look and compare the software with the help of verified user reviews.