Table of contents

- Why indexing is important

- How you can find out whether your website is indexed

- How can websites be indexed by search engines?

- How long does indexing take?

- Can indexing be sped up?

- How can I deindex web pages?

- Troubleshooting: My website does not appear in the index

Indexing is the process of adding web pages to an ordered register, the index. Only pages that Google has indexed can be found by users through the search engine, which makes indexing fundamental both for search engines and for you as a website operator.

A search engine's index is far more complex than a traditional directory. It is not a simple alphabetical or chronological list; it is organised by a multitude of different criteria. Google essentially tries to index everything it can find, using programs called crawlers. There are only two restrictions:

- The website operator does not want the website to be indexed.

- The website does not comply with Google's guidelines.

Our guest author Alex Wolf shows why indexing is so important for you and the steps you can take to find out if your website is indexed.

Why indexing is important

A search engine depends on two things:

- an extensive index of websites

- a clever algorithm to deliver the best results from the index for a search query

The second only works if suitable, up-to-date pages are in the index. Timeliness matters especially for news. A search engine can only deliver good results when its index supplies enough material for the algorithm to learn from.

How you can find out whether your website is indexed

Google offers two quick and simple ways to find out whether web pages are indexed: a site query and a check via Google Search Console.

The following sections explain in more detail how to use both methods.

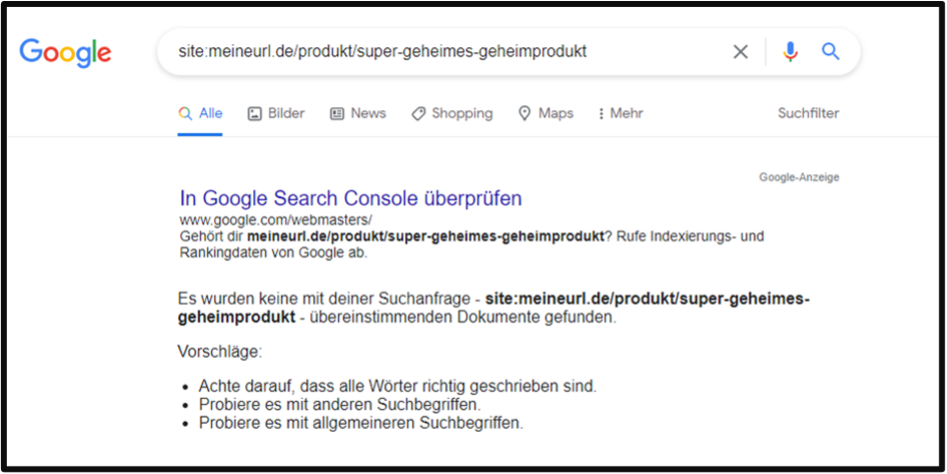

Method 1: Checking with a site query

A site query works like a normal Google search, except that an operator is added that restricts the results to a single website, so you get exactly the result you are looking for.

To check whether the page myurl.com/products/super-secret-secret-product is indexed, you could simply search for 'myurl super secret secret product'. The disadvantage is that this query is not restricted: you get every result that matches the search terms, from any website. Prefixing the query with the 'site:' operator limits the search to the website that follows it.

If you enter 'site:' followed by the full URL (for example, site:myurl.com/products/super-secret-secret-product), you search the index for that URL only.

The site query can be combined with further search criteria:

- site:myurl.com shoes: Delivers all subpages that contain the term 'shoes'.

- site:myurl.com intitle:shoes: Delivers all subpages that have 'shoes' in the title.

- site:myurl.com filetype:docx: Delivers all indexed .docx files of the website. This also works with other file types.

- site:myurl.com inurl:trousers: Delivers all results of our website with 'trousers' in the URL.
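To see what these operator queries look like when sent to Google, here is a minimal Python sketch that assembles them and percent-encodes them into a search URL. The helper names `site_query` and `search_url` are hypothetical, and the sketch assumes the standard `https://www.google.com/search?q=` address. It only builds the URLs you could open in a browser; it does not fetch or scrape any results.

```python
from urllib.parse import urlencode

# Assumption: Google's regular web search address; the operators go into the q parameter.
SEARCH_BASE = "https://www.google.com/search"


def site_query(domain: str, *criteria: str) -> str:
    """Prefix the query with site: and append any further terms or operators."""
    return " ".join([f"site:{domain}", *criteria])


def search_url(query: str) -> str:
    """Percent-encode the query (spaces become '+', ':' becomes '%3A')."""
    return f"{SEARCH_BASE}?{urlencode({'q': query})}"


if __name__ == "__main__":
    queries = [
        site_query("myurl.com/products/super-secret-secret-product"),
        site_query("myurl.com", "shoes"),
        site_query("myurl.com", "intitle:shoes"),
        site_query("myurl.com", "filetype:docx"),
        site_query("myurl.com", "inurl:trousers"),
    ]
    for query in queries:
        print(query, "->", search_url(query))
```

Note that there must be no space between an operator and its value (intitle:shoes, not intitle: shoes), otherwise the value is treated as an ordinary search term.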

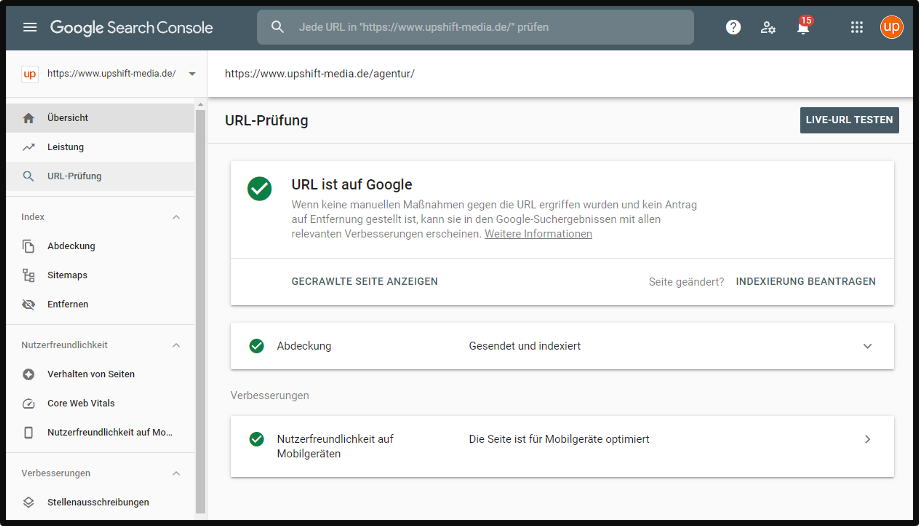

Method 2: Checking through Google Search Console

Google Search Console provides a complete report on the indexing status. However, checking with Google Search Console requires that your website has been added there as a property and verified.

By entering a URL in the search field at the top of Google Search Console, you can query its current indexing status.

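To check the indexing status of the entire website, use the 'Coverage' report (in current versions of Google Search Console, this report is called 'Pages' and is found under 'Indexing').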

In the 'Coverage' report of Google Search Console, you see the indexed pages as well as the pages excluded from indexing. Indexed pages fall into two statuses:

- Valid with warnings: These pages appear in the index, but Google has noticed issues with them. This is the case, for example, if an indexed page has been blocked from crawling by robots.txt.

- Valid: All regular pages that were indexed without errors or issues. This includes pages that are indexed but not listed in the sitemap.

How can websites be indexed by search engines?

Pages can get into the index in two ways: through a passive or an active approach. Google officially supports both, but neither guarantees indexing.

The passive approach

With the passive approach, you as the website operator take no action yourself; Google tries to find and index the website on its own. The prerequisite is that the website is not blocked for crawlers and, ideally, that it has incoming links from internal or external sources. You can check whether the website is accessible to crawlers, and what its indexing status is, with the SEO tool Ryte.

With Ryte, you get a complete overview of the crawlability and indexing status of the entire website.

The passive approach can cause problems if the website has few or no incoming links. Content may also be indexed much later.

The active approach

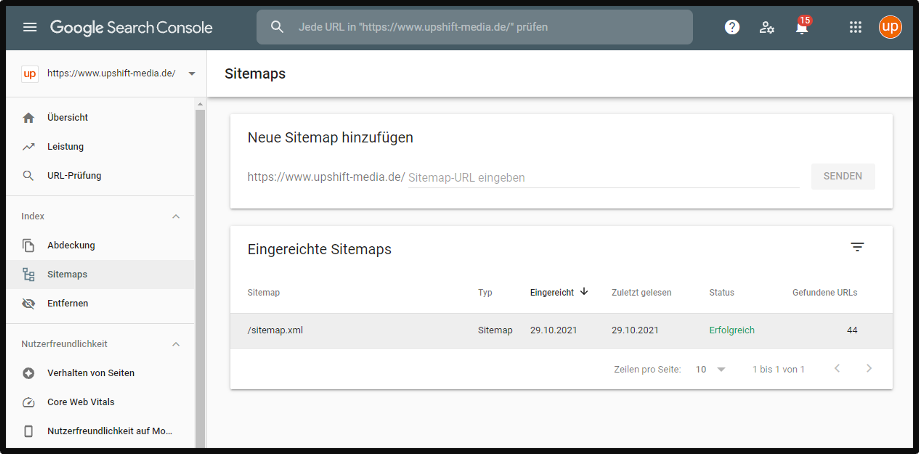

With the active approach, you provide the search engine with a list of URLs, known as a sitemap. The sitemap is usually hosted at a location on the domain that search engines can access, and its location can also be specified in the robots.txt file.

With the help of the sitemap, search engines can recognise changes to the website more quickly, because each entry in the sitemap can carry a timestamp (lastmod) with the date and time of the last change.
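The following Python sketch shows roughly what a minimal sitemap with timestamps looks like when generated with the standard library. It follows the sitemaps.org XML format (`urlset`, `url`, `loc`, `lastmod`); the function names and example URLs are hypothetical, and a real site would usually generate the file from its CMS or build process. The second helper produces the `Sitemap:` line that points crawlers to the file from robots.txt.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from typing import Mapping

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Mapping[str, datetime]) -> str:
    """Return sitemap XML for a mapping of page URL -> time of last change."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_changed in pages.items():
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc
        # W3C Datetime format, e.g. 2023-12-01T09:30:00+00:00
        ET.SubElement(entry, "lastmod").text = last_changed.isoformat()
    # The declaration is written by hand so that it always states UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


def robots_sitemap_line(sitemap_url: str) -> str:
    """robots.txt directive pointing crawlers to the sitemap (must be an absolute URL)."""
    return f"Sitemap: {sitemap_url}"


if __name__ == "__main__":
    print(build_sitemap({
        "https://myurl.com/": datetime(2023, 12, 1, 9, 30, tzinfo=timezone.utc),
        "https://myurl.com/products/super-secret-secret-product": datetime(2023, 11, 20, tzinfo=timezone.utc),
    }))
    print(robots_sitemap_line("https://myurl.com/sitemap.xml"))
```

ElementTree escapes characters such as `&` in URLs automatically, which the sitemap format requires.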

The sitemap can also be submitted via Google Search Console: select 'Sitemaps' in the left-hand menu and enter the path to the sitemap. You can also submit several sitemaps, for example one for each language version.

Submit a sitemap via the Google Search Console

In Google Search Console, you can also check whether individual URLs have already been indexed by Google. To do this, either enter the URL in the search field at the top or click 'URL Inspection' in the sidebar and then enter the URL. Google first checks the indexing status and then, if the URL has not been indexed yet, lets you request indexing.
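For many URLs, the same status check is also available programmatically through the Search Console URL Inspection API. The sketch below only builds the request with Python's standard library; it assumes you already have an OAuth 2.0 access token for a verified property, and the function names are hypothetical. The API reports the indexing status but, unlike the button in the interface, cannot request indexing. Verify the field names against the official API reference before relying on them.

```python
import json
import urllib.request

# Endpoint of the Search Console URL Inspection API (method urlInspection.index.inspect).
INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspection_request(page_url: str, property_url: str, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking for the index status of page_url.

    property_url is the verified property, e.g. "https://myurl.com/" for a URL-prefix
    property or "sc-domain:myurl.com" for a domain property.
    """
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": property_url}).encode("utf-8")
    return urllib.request.Request(
        INSPECT_ENDPOINT,
        data=body,
        headers={
            "Authorization": f"Bearer {access_token}",  # assumption: a valid OAuth 2.0 token
            "Content-Type": "application/json",
        },
        method="POST",
    )


def coverage_state(response: dict) -> str:
    """Pull the human-readable coverage state out of a parsed API response."""
    return response["inspectionResult"]["indexStatusResult"]["coverageState"]


if __name__ == "__main__":
    request = build_inspection_request(
        "https://myurl.com/products/super-secret-secret-product",
        "https://myurl.com/",
        "YOUR_ACCESS_TOKEN",  # placeholder, not a real token
    )
    # Sending it would mean urllib.request.urlopen(request), then json.load() on the response.
    print(request.get_method(), request.full_url)
```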

In general, the chance of timely indexing is higher with the active approach than with the passive approach.

How long does indexing take?

To put it in the words of John Müller (Webmaster Trends Analyst at Google): 'It depends.' The time until a website is indexed depends on how often it is crawled, how often its content changes and how easily search engines can read it.

First, the website is crawled, which can happen within a few days. The crawled content is then indexed, a process that can take several weeks. Getting your web pages onto the first page of Google after that requires good search engine optimisation (SEO), a lot of work, diligence and patience.

Can indexing be sped up?

In short: no, indexing cannot be sped up. Although you can assign individual URLs a priority in the sitemap, Google ignores this value. Submitting the same URL several times via Google Search Console does not speed up indexing either. Patience is really the only thing that helps.

How can I deindex web pages?

There are several ways to remove pages that have already been indexed. One is the robots meta tag: with <meta name='robots' content='noindex'> in the page's head, the page is either not added to the index in the first place or removed from it the next time it is crawled.
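To check a page's HTML for this directive, a minimal Python sketch using the standard library's `html.parser` could look like this. It assumes you already have the page's HTML as a string; the class and function names are hypothetical. It treats `none` (equivalent to `noindex, nofollow`) and the Google-specific `googlebot` meta name as blocking too. The same directive can also be sent as an `X-Robots-Tag` HTTP header, which this sketch does not inspect.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # html.parser already lower-cases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").strip().lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def blocks_indexing(html: str) -> bool:
    """True if a robots meta tag contains noindex or none."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return bool(parser.directives & {"noindex", "none"})


if __name__ == "__main__":
    page = "<html><head><meta name='robots' content='noindex'></head><body></body></html>"
    print(blocks_indexing(page))  # True
```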

If a web page is no longer available and therefore returns a 404 status code, or redirects elsewhere via 301/302, it is automatically removed from the index after a while.

Important: Disallowing a web page in robots.txt does not remove it from the index or prevent it from being indexed. The only effect is that the page is not crawled; because of incoming links, it can still be indexed. And since crawlers cannot access the page, they also never see a noindex tag placed on it.
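Python's standard library includes a robots.txt parser that reflects exactly this distinction: it answers whether a crawler may fetch a URL, not whether the URL is in the index. The robots.txt content and URLs below are hypothetical examples. Python's parser does not implement every extension Google supports (for example `*` wildcards in paths), so treat its answers as an approximation of Googlebot's behaviour.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks the /products/ directory for all crawlers.
ROBOTS_TXT = """\
User-agent: *
Disallow: /products/

Sitemap: https://myurl.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Crawling is disallowed here, yet the URL may still be indexed via incoming links.
print(parser.can_fetch("Googlebot", "https://myurl.com/products/super-secret-secret-product"))  # False
# Paths without a matching Disallow rule remain crawlable.
print(parser.can_fetch("Googlebot", "https://myurl.com/shoes"))  # True
# The Sitemap directive is read independently of the user-agent groups.
print(parser.site_maps())  # ['https://myurl.com/sitemap.xml']
```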

Troubleshooting: My website does not appear in the index

If you cannot find your website via the search engine, there can be various reasons. To quickly rule out that the problem lies with the website itself, work through this checklist of important questions:

1. Is the website accessible from outside via the URL?

Check with different devices whether the website can also be reached from outside your own network. Does it perhaps return a 404 status code?

2. Is a redirect active?

Check whether a 301 or 302 redirect is active and remove it if it is not intended.

3. Do the meta robots tags block indexing?

If the instruction 'noindex' or 'none' appears in the meta robots tags, indexing is suppressed.

4. Does a canonical tag point to another page?

A canonical tag usually points to the original version of a page's content and helps search engines deal with duplicate content. If the canonical tag on your page points to a different URL, Google will usually index that URL instead of yours.

5. Is the crawl blocked by the robots.txt?

If the web page or its directory may not be crawled, indexing is significantly impeded: it can be delayed or may not happen at all.
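Items 1, 2 and 4 of this checklist can be partly automated. The Python sketch below assumes you have already fetched the page (status code, response headers and HTML) without following redirects, and it reports a 404, a redirect target or a canonical tag that points to a different URL. The function and class names are hypothetical, and the URL comparison is deliberately simplistic: it only ignores a trailing slash.

```python
from html.parser import HTMLParser
from typing import List, Mapping, Optional
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        rels = attributes.get("rel", "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attributes.get("href") or None


def audit_page(url: str, status: int, headers: Mapping[str, str], html: str) -> List[str]:
    """Return human-readable problems for checklist items 1, 2 and 4."""
    problems = []
    lowered = {key.lower(): value for key, value in headers.items()}
    # Item 1: is the page reachable at all?
    if status == 404:
        problems.append("page returns 404 (not found)")
    # Item 2: is a redirect active? Location may be relative, so resolve it.
    elif status in (301, 302, 307, 308):
        target = urljoin(url, lowered.get("location", ""))
        problems.append(f"redirect {status} to {target}")
    elif status != 200:
        problems.append(f"unexpected status code {status}")
    # Item 4: does a canonical tag point to a different URL?
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    if parser.canonical:
        canonical = urljoin(url, parser.canonical)
        if canonical.rstrip("/") != url.rstrip("/"):  # simplistic comparison
            problems.append(f"canonical points to {canonical}")
    return problems


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="https://myurl.com/shoes"></head>'
    print(audit_page("https://myurl.com/shoes?colour=red", 200, {}, html))
```

A canonical pointing elsewhere is not always a mistake (parameter variants often point to the main URL on purpose); the check only flags it so you can confirm it is intended.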

Tools such as Screaming Frog SEO Spider give you a quick overview of configured redirects and meta information as well as the accessibility of the web page.

Recommended SEO Tools

You can find and compare more recommended SEO tools on OMR Reviews. In total, we have listed over 150 SEO tools (as of December 2023) that can help you increase your organic traffic in the long term. Take a look and compare the software with the help of the verified user reviews.